Flooding in Paris in 2018: Square du Vert-Galant seen from Place du Pont-Neuf. Data collected by the Institute for Economics and Peace between 1900 and 2019 show an increase in the number of floods, from 39 in 1960 to 396 in 2019. Source: Institute for Economics and Peace, Ecological Threat Register 2020: Understanding Ecological Threats, Resilience and Peace, Sydney, September 2020.

For the last two decades, cloud computing has been the linchpin behind most of our daily clicks. In a nutshell, the technique boils down to transporting all the data to a handful of distant, giant server facilities where it is first stored and then processed. Only then can the result be delivered to the end user. However, this centralized architecture suffers from a number of flaws, not least the latency incurred by pushing all that data through limited network bandwidth. And with the advent of technologies such as autonomous cars and the Internet of Things (IoT), this model is no longer adequate.


Certain data may become outdated if not processed right away. It therefore requires ‘online’ processing, which is faster but calls for a new paradigm.

Inria ISFP researcher - STACK Team

Balouek, an Inria scientist, is a member of STACK, a research team[1] laying the groundwork for a novel model that will not only leverage the cloud’s server farms but span the whole computing continuum, harnessing the countless other resources available along the network, including a myriad of devices located at the edge.

In this context, Balouek focuses more specifically on urgent science: a class of time-critical applications that leverage distributed data sources to support important decision-making during natural disasters, extreme events and similar crises. “In these domains, some applications have been around for a long time. They deserve to be upgraded with today’s technology but, unfortunately, these fields may not offer the same economic incentives for modernization as, say, e-commerce.”

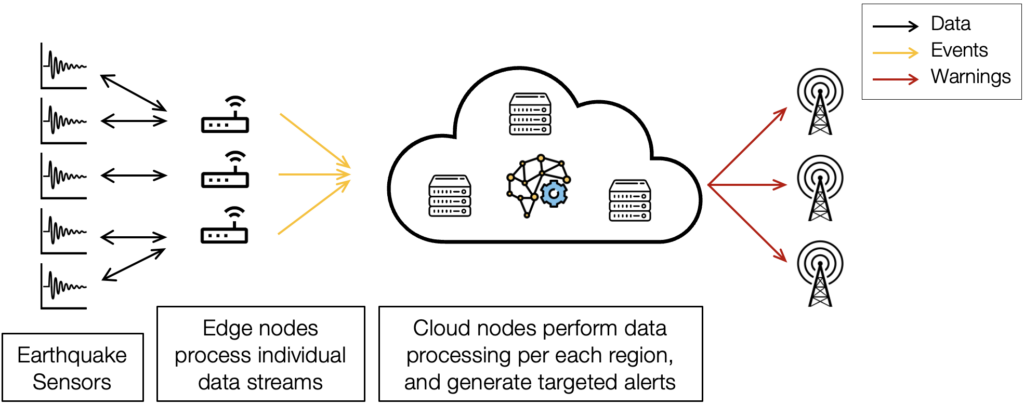

Back in 2020, together with colleagues at the Rutgers Discovery Informatics Institute, Balouek proposed an earthquake early warning application[2] to illustrate the concept and merit of distributed analytics across a dynamic infrastructure spanning the computing continuum. This workflow combined data streams from geo-distributed seismometers and high-precision GPS stations to detect large ground motions[3]. The underlying work received an “Outstanding Paper Award in Artificial Intelligence for Social Impact” at one of the leading international academic conferences in artificial intelligence, AAAI 2020.

Abstract architecture of the digital continuum for earthquake detection.

Exploiting the continuum requires using computational resources efficiently along the data path, trading off processing speed against the quality of the results obtained.
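To make that trade-off concrete, here is a minimal sketch of deciding where along the data path a processing stage should run. The three tiers and their latency and quality figures are purely hypothetical illustration values, not measurements from the STACK work; the rule is simply "best quality that still meets the deadline".

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Tier:
    name: str
    latency_ms: float  # hypothetical time to obtain a result from this tier
    quality: float     # hypothetical result-quality score in [0, 1]


def place(tiers: Sequence[Tier], deadline_ms: float) -> Optional[Tier]:
    """Pick the highest-quality tier whose latency still meets the deadline."""
    feasible = [t for t in tiers if t.latency_ms <= deadline_ms]
    if not feasible:
        return None  # no tier is fast enough for this deadline
    return max(feasible, key=lambda t: t.quality)


# Illustrative values only: closer tiers answer faster, farther ones answer better.
TIERS = [
    Tier("edge", latency_ms=10, quality=0.70),
    Tier("fog", latency_ms=50, quality=0.85),
    Tier("cloud", latency_ms=300, quality=0.95),
]

for deadline in (20, 100, 1000):
    chosen = place(TIERS, deadline)
    print(deadline, chosen.name if chosen else "none")
```

With these made-up numbers, a 20 ms deadline lands on the edge, 100 ms on the fog layer and 1,000 ms in the cloud. A real scheduler would also weigh bandwidth, energy and resource availability.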

Data Processed Close to Sensor Location

The scenario emulated 1,000 sensors deployed on 25 host machines, with each set of 20 sensors forming a geographical region. With each sensor producing 1,200 messages at 100 Hz, no fewer than 1.2 million messages had to be handled in the blink of an eye. Instead of being sent straight to the cloud, this data was first processed close to the sensor location for initial classification. “This allows us to increase the level of trust in the results. Different stations detect various phenomena: a tremor here, a tremor there. There is a collective intelligence through which, after detecting these incidents, several stations figure out that they are dealing with a quake. So local detection takes place before the data reaches the cloud for further aggregation.” Moreover, even if the cloud facility goes out of service in the aftermath of the quake, the application should still be able to perform detection and adapt dynamically to the resources that remain accessible.
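The “collective intelligence” described above can be illustrated with a deliberately simple stand-in. The published work uses a distributed multi-sensor machine learning approach; the sketch below instead assumes a plain amplitude threshold at each station and a regional vote that declares an event when enough distinct stations flag a tremor within a short time window. The threshold, station count and window length are hypothetical. Because the vote runs at the regional level, it does not depend on the cloud being reachable.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    station: str
    t: float          # timestamp in seconds
    amplitude: float  # hypothetical ground-motion amplitude (arbitrary units)


def local_detect(reading: Reading, threshold: float) -> bool:
    """Station-level classification: flag a tremor if amplitude exceeds threshold."""
    return reading.amplitude > threshold


def regional_event(readings, threshold=1.0, min_stations=3, window_s=2.0) -> bool:
    """Declare a regional event when at least `min_stations` distinct stations
    flag a tremor within a sliding window of `window_s` seconds."""
    flags = sorted((r.t, r.station) for r in readings if local_detect(r, threshold))
    start = 0
    for end in range(len(flags)):
        # Shrink the window from the left until it spans at most window_s seconds.
        while flags[end][0] - flags[start][0] > window_s:
            start += 1
        stations = {s for _, s in flags[start:end + 1]}
        if len(stations) >= min_stations:
            return True
    return False


readings = [
    Reading("A", 0.0, 2.0),
    Reading("B", 0.5, 1.5),
    Reading("C", 1.2, 3.0),
    Reading("D", 1.4, 0.2),  # below threshold: not flagged
]
print(regional_event(readings))  # True
```

A single station repeatedly shaking, or three stations flagging tremors many seconds apart, does not trigger the regional alarm; only a cluster of agreeing stations does.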

The research is essentially twofold. The first aspect deals with resource management: discovering available assets, mapping computations onto them, assembling resource federations, letting resources check in or out as they join or leave a federation, and so on.
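As a rough illustration of these resource-management operations, here is a toy federation registry. It is a minimal in-memory sketch, assuming resources are described only by a name and a set of capability tags; the class names, tags and the alphabetical mapping rule are hypothetical and do not reflect an actual STACK system.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    name: str
    capabilities: set = field(default_factory=set)  # hypothetical capability tags


class Federation:
    """Toy registry: resources check in and out at will, and computations are
    mapped onto whatever currently offers the needed capability."""

    def __init__(self):
        self._members = {}

    def check_in(self, resource: Resource) -> None:
        self._members[resource.name] = resource

    def check_out(self, name: str) -> None:
        # Leaving is allowed at any time; unknown names are silently ignored.
        self._members.pop(name, None)

    def discover(self, capability: str) -> list:
        """List the names of current members offering a capability, sorted."""
        return sorted(r.name for r in self._members.values() if capability in r.capabilities)

    def map_task(self, capability: str):
        """Map a computation to the first matching resource, or None if none is left."""
        candidates = self.discover(capability)
        return candidates[0] if candidates else None


fed = Federation()
fed.check_in(Resource("stock-server", {"compute"}))   # repurposed business server
fed.check_in(Resource("air-sensor-3", {"pm25", "temperature"}))
print(fed.discover("compute"))  # ['stock-server']
fed.check_out("stock-server")
print(fed.map_task("compute"))  # None
```

Real federations would add authentication, health checks and richer matching, but the check-in/check-out life cycle is the part that makes the continuum dynamic.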

At the moment, people tend to build one system for earthquakes, another one for floods, and yet another for wildfires. But it would make more sense to reuse existing resources and adopt shared platforms. Unfortunately, it is only when an event breaks out that people seek to specialize computing resources and sensors.

“All of a sudden, the scientist is faced with an emergency situation on top of which there is an urgency to marshal resources. Imagine a quake occurs. Who could help me perform computations? Could I repurpose computers that were originally dedicated to, say, stock management? Could I access and use sensors that were originally dedicated to video surveillance or air pollution? How could powerful resources, reassigned to a new task, become an asset to deal with the new situation?”

On such shared platforms, “prioritization becomes crucial, because someone’s urgency isn’t necessarily someone else’s. If a wildfire and a tornado occur simultaneously, decisions must be made. Priorities have to be set according to crisis management’s rules and protocols. We will have to take this notion of hierarchy into consideration and propose visualization tools to help decision-making.”
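One simple way to picture such prioritization is a greedy allocator that serves concurrent incidents from a shared pool of nodes in priority order. This is only a sketch: it assumes each incident already carries a numeric priority (lower means more urgent) derived from crisis-management rules, and the incident names and node counts are hypothetical.

```python
import heapq


def allocate(incidents, nodes_available):
    """Greedy allocation of a shared node pool to concurrent incidents.

    incidents: list of (priority, name, nodes_requested) tuples, where a lower
    priority value means more urgent. Returns {name: nodes_granted}.
    """
    heap = list(incidents)
    heapq.heapify(heap)  # most urgent incident is always at the top
    grants = {}
    while heap:
        _priority, name, requested = heapq.heappop(heap)
        granted = min(requested, nodes_available)
        grants[name] = granted
        nodes_available -= granted
    return grants


print(allocate([(2, "tornado", 30), (1, "wildfire", 50)], nodes_available=60))
# {'wildfire': 50, 'tornado': 10}
```

Strict priority order is the simplest policy; a real platform might instead guarantee every incident a minimum share, which is exactly the kind of rule that crisis-management protocols would have to define.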

Peer-to-Peer

In this orchestration, peer-to-peer (P2P) mechanisms play an important role. “Think of connected vehicles. Imagine that you lose access to the network linking your car to the manufacturer’s centralized server in Japan. Thanks to P2P, your car will still be able to receive information shared by vehicles in its surroundings. It will remain in an information bubble.”
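The “information bubble” can be sketched as hop-by-hop flooding between nearby vehicles, with no central server involved. This assumes each vehicle can reach any other vehicle within a fixed radio range and forwards a message at most a limited number of hops; the positions (in metres), range and hop limit are hypothetical, and real vehicular networks use far more elaborate dissemination protocols.

```python
import math
from collections import deque


def neighbors(positions, vid, radio_range):
    """Vehicles within radio range of `vid` (straight-line distance)."""
    x0, y0 = positions[vid]
    return [v for v, (x, y) in positions.items()
            if v != vid and math.hypot(x - x0, y - y0) <= radio_range]


def spread(positions, source, radio_range, max_hops):
    """Flood a message from `source` through nearby vehicles.

    Returns {vehicle: hop count at which it first received the message}.
    """
    reached = {source: 0}
    queue = deque([source])
    while queue:
        v = queue.popleft()
        if reached[v] == max_hops:
            continue  # hop limit reached: do not forward further
        for n in neighbors(positions, v, radio_range):
            if n not in reached:
                reached[n] = reached[v] + 1
                queue.append(n)
    return reached


cars = {"a": (0, 0), "b": (100, 0), "c": (200, 0), "d": (1000, 0)}
print(spread(cars, "a", radio_range=150, max_hops=5))  # {'a': 0, 'b': 1, 'c': 2}
```

Vehicle "c" is out of direct range of "a" but still learns the news through "b", while the distant "d" stays outside the bubble.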

New Programming Models

The second aspect of the research concerns the programming models designed to ease the use of these resources. “Today, developing an application on the cloud is relatively easy. Someone with two years of development experience can go to Amazon Web Services, where templates and software abstractions greatly facilitate the work. But it is not as straightforward on the continuum, where dynamicity is higher and developers do not own the resources they use. If I built an earthquake detection program that worked fine in France and now plan to reuse it in Japan, in a new situation with new resources, the programming model must be smart enough to adapt itself to this context quickly.”
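One way a programming model might support that kind of portability is to let the application declare abstract roles and have the runtime bind them to whatever concrete resources a site offers. The sketch below assumes exactly that; the role names, capability tags and resource identifiers are hypothetical, and the greedy matching is a simplification (it does not search for an optimal assignment).

```python
def bind(requirements, site_inventory):
    """Resolve an application's abstract requirements against one site's resources.

    requirements: {role: capability}, e.g. {"motion": "seismometer"}.
    site_inventory: {resource_id: set_of_capabilities}.
    Returns {role: resource_id}; raises LookupError if a role cannot be filled.
    """
    binding, used = {}, set()
    for role, capability in requirements.items():
        match = next((rid for rid, caps in sorted(site_inventory.items())
                      if capability in caps and rid not in used), None)
        if match is None:
            raise LookupError(f"no resource offers {capability!r} for role {role!r}")
        binding[role] = match
        used.add(match)  # each resource fills at most one role
    return binding


# The same hypothetical application description, reused at two sites.
APP = {"motion": "seismometer", "displacement": "gps", "detector": "compute"}

france = {"fr-seis-01": {"seismometer"}, "fr-gnss-07": {"gps"}, "fr-edge-3": {"compute"}}
japan = {"jp-node-a": {"compute", "gps"}, "jp-s-12": {"seismometer"}, "jp-node-b": {"compute"}}

print(bind(APP, france))
print(bind(APP, japan))
```

The application code never names a specific machine; only the binding step changes when it moves from one site to another.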

Another concern revolves around the need to optimize the balance between quality of results and resource usage. “Typically, in the field of video, Full HD cameras deliver many frames per second, whereas many applications only need two or three images per second to work from. Processing fewer frames frees resources that can be allocated to something else. If we run our algorithms at a coarser granularity, they can work faster or perform more tasks simultaneously, and we consume less bandwidth as well. So there is a whole trade-off to be found between what I want and what I can actually do, depending on my infrastructure or my self-imposed constraints.”
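The frame-rate example can be sketched in a few lines: keep evenly spaced frames so that a stream is thinned to the rate the application actually needs. The 30 frames-per-second source rate used below is an assumption for illustration, not a figure from the article.

```python
def subsample(frames, source_fps, target_fps):
    """Keep roughly `target_fps` out of every `source_fps` frames, evenly spaced."""
    if not 0 < target_fps <= source_fps:
        raise ValueError("target_fps must be in (0, source_fps]")
    step = source_fps / target_fps  # keep one frame every `step` frames
    kept, next_pick = [], 0.0
    for i, frame in enumerate(frames):
        if i >= next_pick:
            kept.append(frame)
            next_pick += step
    return kept


one_second = list(range(30))  # assumed 30 fps camera: frame indices 0..29
print(subsample(one_second, source_fps=30, target_fps=3))  # [0, 10, 20]
```

Going from 30 to 3 frames per second means analysing one frame in ten, roughly 90% less processing and transfer for that stream, which is the kind of saving that can be reassigned to other tasks.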

Seeking Collaboration with Urgent Scientists

To further this research, Balouek is keen to collaborate with urgent scientists and crisis management experts. “If we are to build these programming models, we must have the users in the loop and work hand in hand with them. So we invite people to bring us their use cases. If we already have solutions, fine: we will share our experience. If not, it might be an opportunity to launch joint research. We have a well-rounded questionnaire as a starting point to describe their case. What works? What doesn’t? Scientists usually have existing solutions and their own expertise. Our purpose is not to erase this legacy but to answer the questions of users who try to project themselves into the future. For instance, we could point out that ten years from now, the cost of power could make data centers less affordable. Therefore, the notion of trade-off is worth considering, and it might be appropriate to start studying a new solution without further ado.”

In the medium term, Balouek intends to create a road map for assembling these applications generically from small building blocks, each meant for a specific task. “We try to focus on basic operations such as how to collect data and how to move it. These are primitives that all scientists need at one moment or another. The goal is also to automate as many things as possible so that users won’t have to open up their code repository but can instead work from high-level metrics presented in an intelligible way. They will be able to focus on the metrics pertaining to their domain.”
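The idea of assembling applications from small, reusable bricks can be sketched as function composition. The primitives below (collect, filter, move) are hypothetical stand-ins chosen to mirror the operations mentioned in the quote; moving data is simulated by appending to a local list, and the pipeline reports a single domain-agnostic metric.

```python
from functools import reduce


def compose(*stages):
    """Chain small, reusable stages into one pipeline, applied left to right."""
    return lambda data: reduce(lambda acc, stage: stage(acc), stages, data)


# Hypothetical primitives, each doing one generic job.
def collect(source):
    return list(source)


def keep_above(threshold):
    return lambda items: [x for x in items if x > threshold]


def move_to(sink):
    def _move(items):
        sink.extend(items)   # stand-in for shipping data to another site
        return len(items)    # report a simple metric: items moved
    return _move


remote_store = []
pipeline = compose(collect, keep_above(0.5), move_to(remote_store))
print(pipeline([0.1, 0.7, 0.9]))  # 2
print(remote_store)               # [0.7, 0.9]
```

Each brick knows nothing about earthquakes, floods or wildfires; the domain-specific part is only in how they are combined and which thresholds are chosen.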

The scope of application actually extends beyond urgent science per se. “At industry level, for instance, there is interest in the concept of digital twins. Having such a twin of a building, coupled with a real-time assessment of how a fire is spreading, can help firefighters make their way through smoke-filled rooms, reach specific places or fetch specific objects. The application can thus guide the intervention. And there are plenty of such examples where people working in public safety could benefit from this type of tool.”

- [1] STACK is a joint research team of Inria, IMT Atlantique, Nantes University and Orange.

- [2] Read: Harnessing the computing continuum for urgent science, by Balouek, D., Rodero, I. and Parashar, M. in ACM SIGMETRICS Performance Evaluation Review, 2020.

- [3] Read: A distributed multi-sensor machine learning approach to earthquake early warning, by Fauvel, K., Balouek, D., Melgar, D., Silva, P., Simonet, A., Antoniu, G., Costan, A., Masson, V., Parashar, M., Rodero, I. and Termier, A. In Proceedings of the AAAI Conference on Artificial Intelligence, 2020. Outstanding Paper Award in Artificial Intelligence for Social Impact.