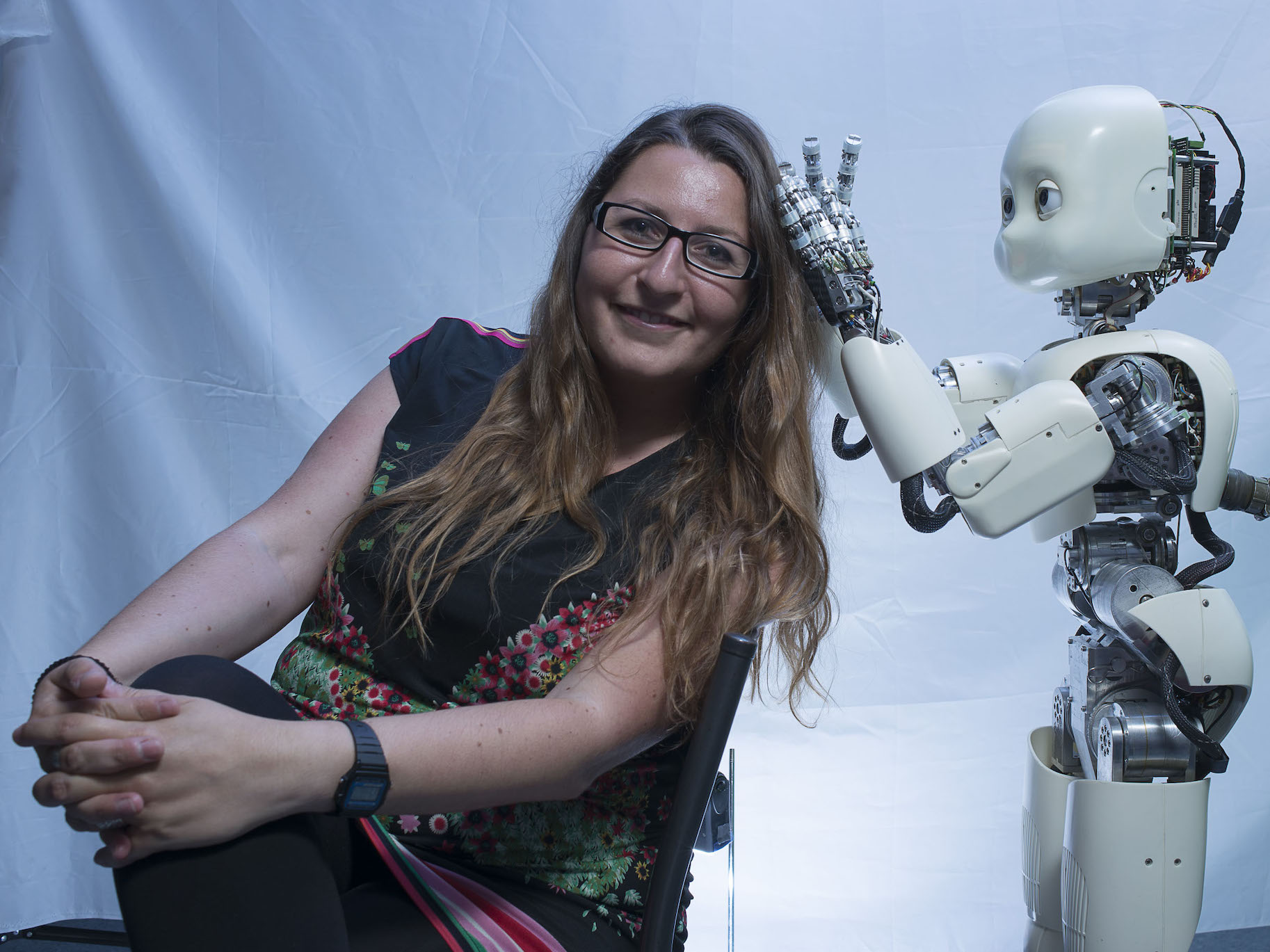

Serena Ivaldi: ‘We want to make robots capable of helping humans better’

Date: changed on 17/10/2024

I did part of my studies in Italy. I obtained a master's degree in automatic control and robotics from the University of Genoa and a doctorate in humanoid robotics from the Italian Institute of Technology. There I was part of the team that designed the iCub humanoid robot, which I watched grow from strength to strength. I worked first on the head, then on the arm, then on optimal control of the arm, whole-body dynamics, and so on.

In 2011 I moved to Paris as a postdoctoral fellow at the Université Pierre et Marie Curie (now Sorbonne University) to work on learning and human-robot interaction. In particular, I worked on taking human factors into account in physical and social interactions. I then moved to Germany, to the Technical University of Darmstadt (TU Darmstadt), for a second postdoc on machine learning applied to robotics.

Finally, in 2014, I was recruited as an Inria research fellow at Loria, where I helped set up the LARSEN research team. I am now a research director there, and I am in the process of setting up a new project-team, HUCEBOT, for human-centered robotics.

With HUCEBOT, we want to develop learning, control and interaction algorithms for robots that help humans.

We are targeting two types of assistance. The first is assistance with physical gestures, using exoskeletons or collaborative robots, in particular through collaborations with the Nancy fire brigade and Nancy Hospital. The second is teleoperation: the idea is to develop robots that can act efficiently and almost autonomously at a distance, taking into account their environment and the operators' intentions.

The long-term objective behind this project-team is to develop robots that know how to interact in the right way and therefore better meet human needs, particularly in healthcare, and perhaps one day as robots deployed on the Moon.

Our research focuses mainly on machine learning, multi-objective and stochastic optimisation, and real-time control. Our models are also fed with information about humans, at the biomechanical level and beyond.

We use machine learning techniques to improve the robot's control. For executing gestures, we use generative models based mainly on human movements, so that the robot can perform its movements in the best possible way.

We also want the robots to move efficiently: so that they do not consume too much energy, but above all so that they do not fall over or hurt the person they are interacting with. To achieve this, we use parametric optimisation and adaptation.

Finally, we have started to use generative models such as large language models (LLMs) and vision-language models (VLMs), so that we can give commands to the robot in natural language, simply by speaking. This is an important building block for us, and it will lead to major changes.

On the mechatronics side, there have been major advances in humanoid robots. Previously, humanoid robots were built on industrial-robot mechatronics, which resulted in robots with complicated mechanics, complicated motors and, above all, very expensive and fragile components. The boom in direct-drive motors (a type of permanent-magnet synchronous electric motor that drives the load directly) has made it possible to equip quadruped robots at an affordable price. All the technologies that enabled the development of quadruped robots are now being applied to the new humanoid platforms.

The progress made in this field in recent years is also helping us move forward with the development of exoskeletons.

The humanoid robot market is currently driven mainly by China and the United States. European research has good ideas, but to be competitive, Europe would need to make major investments comparable to those made in China and the United States. In Europe, we do not have the resources to produce robots in the same way, but our strength lies in software and mathematical techniques.

With robots, the risk is ending up with methods that work on only a single robot. We are therefore working on software and methods that can be used on as many robots as possible, so that they remain sustainable over time.

From a mechatronics point of view, the big challenge will be to build robust robots that can ‘leave the laboratory’. If legged quadruped robots are now in use and on the market, it is thanks to several years of robustness testing, which means they can be used reliably in real-life conditions. Humanoids have not yet reached this stage: walking and moving ‘in real life’ for long periods on battery power is still a challenge.

Another major challenge for the coming years is to close the loop on voice interaction, i.e. to have the robot execute a voice command given by a human in natural language. Getting a robot to interact with a person by voice is extremely complicated. The plans the robot extracts from the human's request have to be translated into something concrete and ‘mathematical’ that the robot can execute. This requires a high level of abstraction in the robot's reasoning: it must be able to tell what is feasible and what is not, and, when a task is feasible, actually carry it out. We need to get to the point where the robot acts like a person: it understands a new instruction in context, comes up with a plan of action (sometimes creatively), executes it autonomously, and asks for help when it does not understand. Once we reach this level of embodied intelligence, the fields of application will explode.